Interfacing the Brain & Machines: Another Step to a Cyborg Future

Controlling robotic prostheses with one’s mind has been a hot research topic, but making a prosthesis ‘talk back’ has remained a stumbling block. Researchers from Switzerland now report progress in creating sensations with a bidirectional neuroprosthesis.

Researchers from the University of Geneva, in Switzerland, are developing a new method to connect a prosthesis to neural activity in both directions, which could solve some long-standing challenges of brain-machine interfaces. Their early tests in mice, published in Neuron, yielded promising results.

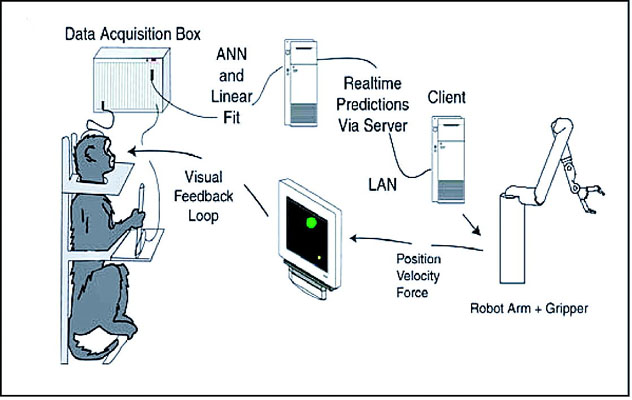

Brain-machine interfaces have been the focus of much research, not only for their potential in biohacking or a cyborg future, but also for concrete applications for paralyzed patients and amputees. Current mind-controlled prostheses rely on visual feedback: the patient watches the robotic arm’s position and adjusts its movement accordingly.

The missing link is called proprioception, one of the lesser-known senses beyond the famous five. It is the sense of where one’s body parts are and how they move, and it is essential to positioning the body in space. So far, it has been out of reach for prosthesis developers, but the feedback technology described in Neuron takes a step toward providing it.

The research team used an optical technique to stimulate brain activity that reflected the prosthesis’s activation. The team set out to teach mice to control a neural prosthesis using only this artificial stimulation as feedback. Whenever the neuron coupled to the prosthesis fired, a blue light was flashed with an intensity proportional to its activity, producing an ‘artificial sensation’. Within 20 minutes, the mice learned to associate this sensation with the neuron’s activity and used it to control the prosthesis.

This learning behavior provided the necessary proof of concept that the artificial input could be integrated as feedback from the prosthesis. The experiments also yielded valuable insights into the still mysterious workings of the brain. For example, the researchers think that the relatively fast pace of learning may be because the technique taps into a very basic function of neurons. The study also showed that the single neuron chosen for feedback could control the movement on its own, without recruiting neighboring neurons for the task.

The next challenge will be producing more complex and efficient sensory feedback, for example by delivering multiple inputs in parallel. In addition to conveying an artificial limb’s position, creating the sensation of touching objects and perceiving the force needed to grasp them could greatly improve the performance of such prostheses.

Written by Denise Neves Gameiro